(An ongoing project...)

Image and Video Processing Lab, The Chinese University of Hong Kong

Most recent successful 3D scene reconstruction algorithms assume that the captured scene is static or slowly moving, which limits their use in natural environments. With the growing interest in 3D-TV and free-viewpoint TV, there is an increasing demand for 3D dynamic scene reconstruction methods that can generate dynamic 3D video that looks plausible to viewers.

Traditional stereo-based reconstruction methods, however, incur a high computational cost when generating depth information for a scene, and the resulting depth suffers from strong noise and errors. Fortunately, several depth cameras, e.g., time-of-flight cameras (SwissRanger 4000) or structured-light depth cameras (Microsoft Kinect), can capture medium-resolution depth at video frame rates (15 Hz to 30 Hz), which makes real-time 3D scene reconstruction feasible.

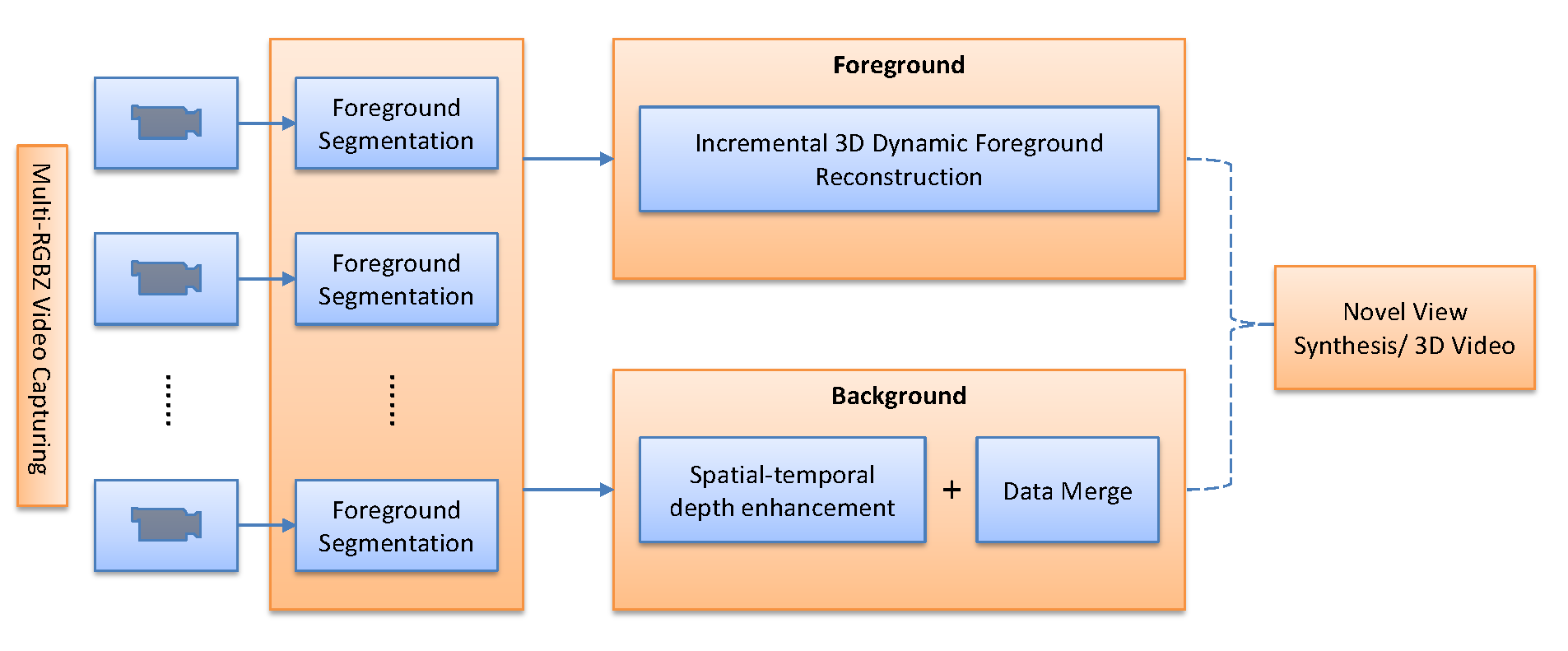

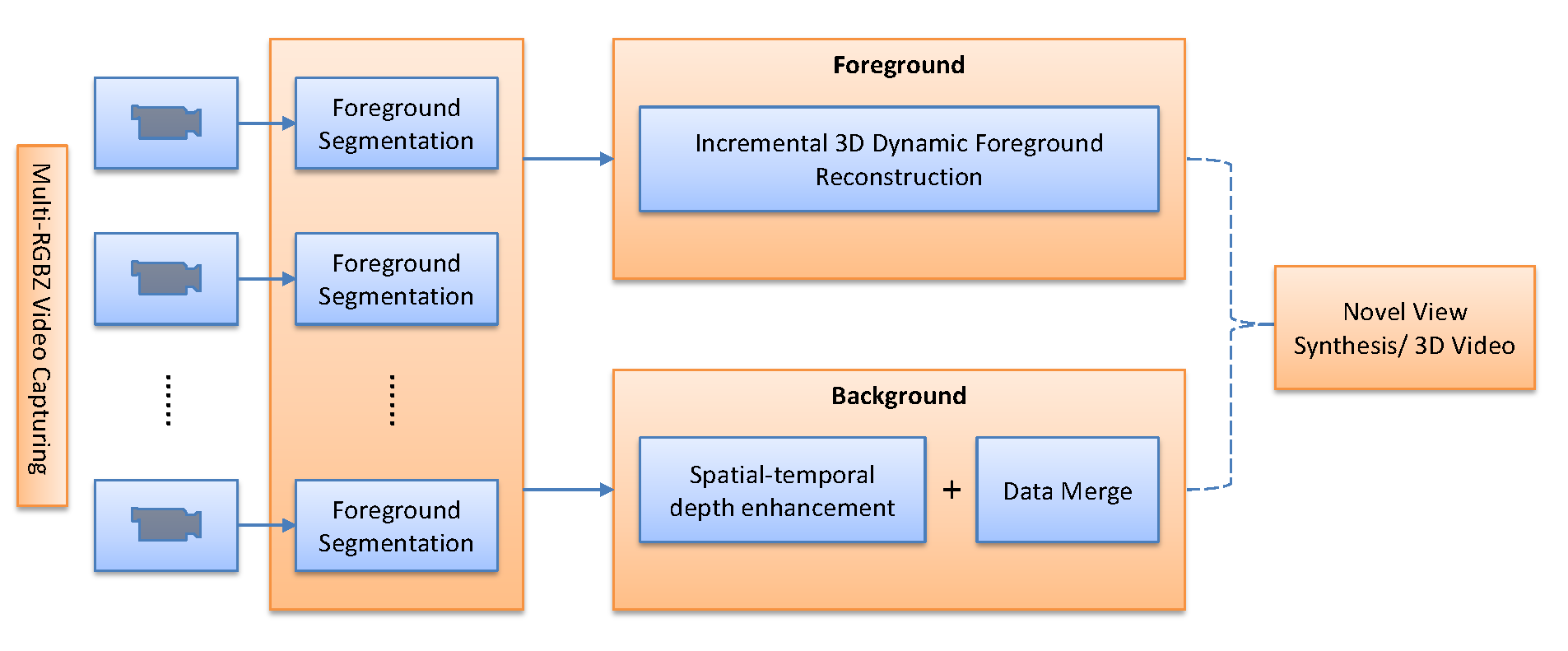

Figure 1. General System Framework.

In our research, we use the Microsoft Kinect as an RGBZ camera. At the current stage, we have completed several preliminary pieces of work on the system setup.

To calibrate the Kinect's intrinsic parameters, we use a modified version of Zhang's method [1][2], with the aim of accurately estimating the systematic depth bias of the depth sensor.
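As a rough illustration of how calibrated intrinsics and a depth-bias correction might be applied, the sketch below back-projects a depth pixel to a 3D point with a pinhole model. The names `DepthIntrinsics` and `backproject` are hypothetical, and the linear bias model (`depth_scale`, `depth_offset`) is only an assumption made for illustration; it is not the depth model used in [2] or in our system.

```python
from dataclasses import dataclass


@dataclass
class DepthIntrinsics:
    """Pinhole intrinsics of the depth camera (hypothetical container)."""
    fx: float  # focal length in pixels, x direction
    fy: float  # focal length in pixels, y direction
    cx: float  # principal point, x coordinate in pixels
    cy: float  # principal point, y coordinate in pixels
    # Assumed linear bias model: z_corrected = depth_scale * z_raw + depth_offset
    depth_scale: float = 1.0
    depth_offset: float = 0.0


def backproject(u: float, v: float, z_raw: float, k: DepthIntrinsics):
    """Map pixel (u, v) with raw depth z_raw to a 3D point in the camera frame."""
    # Correct the systematic depth bias first (assumed linear model).
    z = k.depth_scale * z_raw + k.depth_offset
    # Invert the pinhole projection u = fx * x / z + cx, v = fy * y / z + cy.
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return (x, y, z)
```

For example, with `DepthIntrinsics(fx=500, fy=500, cx=320, cy=240)` a pixel at the principal point with a raw depth of 2.0 maps to a point on the optical axis at depth 2.0.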

To calibrate the extrinsic parameters of multiple Kinects, i.e., to register multiple Kinects, we use an approach that combines visual information from the RGB camera with depth cues from the depth camera [3][4]. In detail, we first use feature matching and RANSAC to estimate the initial pairwise transformations. A Kinect-adaptive iterative closest point (ICP) algorithm then refines the results. In our experiments, the performance is satisfactory.
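The initial pairwise estimation step can be sketched as follows, assuming the feature matches have already been lifted to 3D point pairs using the depth maps. This is a minimal NumPy sketch, not our implementation: the function names `rigid_fit` and `ransac_rigid`, the iteration count, and the 2 cm inlier threshold are all illustrative assumptions. The rigid fit uses the standard SVD-based (Kabsch) solution, and the ICP refinement stage is not shown.

```python
import numpy as np


def rigid_fit(src, dst):
    """Least-squares R, t such that dst is approximately src @ R.T + t (Kabsch)."""
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def ransac_rigid(src, dst, iters=200, thresh=0.02, seed=0):
    """Robustly estimate a rigid transform from matched 3D points (N x 3 arrays)."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        # Three point pairs are the minimal sample for a rigid transform.
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = rigid_fit(src[idx], dst[idx])
        err = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 3:
        raise ValueError("RANSAC found too few inliers for a rigid fit")
    # Refit on all inliers of the best hypothesis.
    R, t = rigid_fit(src[best_inliers], dst[best_inliers])
    return R, t, best_inliers
```

The returned `R` and `t` would then serve as the starting point for the ICP refinement, which generally needs a reasonable initial alignment to converge.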

Research is being carried out on several technical topics: spatio-temporal depth enhancement, geometric occlusion/hole filling, accurate foreground segmentation, and incremental foreground reconstruction, among others.

[1] Z. Zhang, "A flexible new technique for camera calibration", TPAMI, 22(11), pp. 1330-1334, 2000.

[2] D. Herrera C., J. Kannala, J. Heikkilä, "Joint depth and color camera calibration with distortion correction", TPAMI, 2012.

[3] P. Henry, M. Krainin, E. Herbst, X. Ren, D. Fox, "RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments", International Journal of Robotics Research, 31(5), pp. 647-663, 2012.

[4] R. A. Newcombe, S. Izadi, O. Hilliges, D. Molyneaux, D. Kim, A. J. Davison, P. Kohli, J. Shotton, S. Hodges, A. Fitzgibbon, "KinectFusion: Real-Time Dense Surface Mapping and Tracking", IEEE ISMAR, October 2011.